If you run a Blogger site and want better control over how search engines crawl your pages, learning how to generate a custom robots.txt is a smart move. A well-crafted robots.txt file tells search engine crawlers which parts of your blog they may access and which ones to skip. Done right, it helps crawlers focus on your best content and stay away from low-value pages, which supports your blog’s visibility in search. Keep in mind that robots.txt is publicly readable and only advisory, so it can’t hide or protect sensitive pages.

In this guide, you’ll learn seven reliable ways to generate a custom robots.txt for Blogger, whether you’re a total beginner or an advanced user.

Why a Custom Robots.txt File Matters for Blogger SEO

What Does Robots.txt Do for Your Blog?

Think of the robots.txt file as a traffic cop for search engines like Google, Bing, and others. Well-behaved crawlers check this file before they crawl your site. It tells them which URLs to crawl and which to skip, so you control how they spend their time on your blog. Note that it controls crawling, not indexing: a blocked URL can still appear in search results if other pages link to it.

The Risk of Using Blogger’s Default Robots.txt

Blogger automatically serves a generic robots.txt file for every blog, but here’s the catch: it isn’t tuned to your blog. For example, it disallows everything under /search, which also covers your label pages, and it has no way of knowing which other URLs on your blog are low value. Depending on your goals, that can block pages you want crawled or leave unnecessary ones open, wasting crawl budget and limiting your ranking potential.

Why Customization Can Improve Search Rankings

When you generate a custom robots.txt for your blog, you take control of your site’s crawl behavior. You can let bots focus on your key content while blocking search pages, archives, or other low-value URLs. This supports your website’s crawlability and indexability, two essential pillars of any successful SEO strategy. (Related: Website Crawlability)

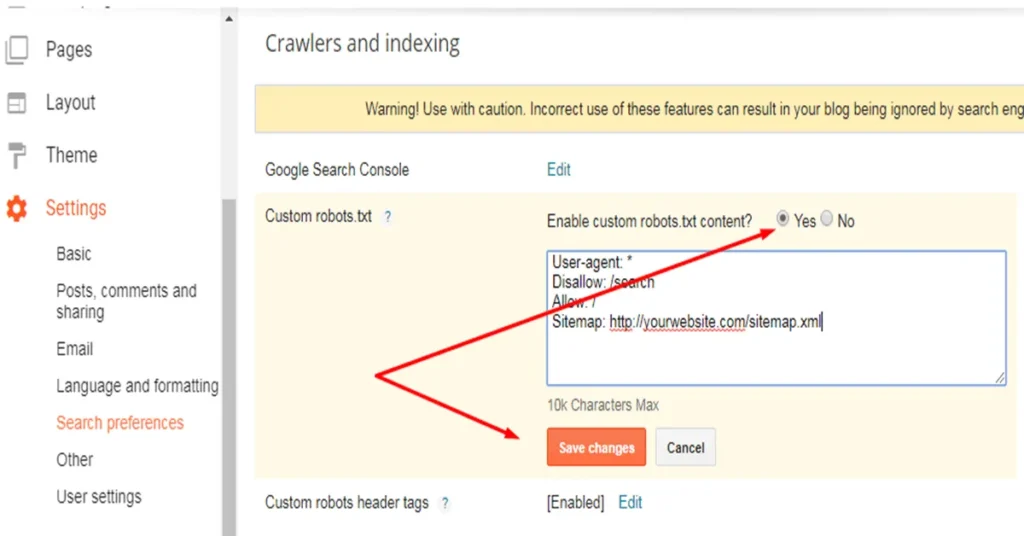

Step 1: Use Blogger’s Built-In Custom Robots.txt Editor

How to Enable and Access the Editor

Blogger offers a straightforward way to add custom robots.txt rules. To activate it:

- Go to Settings in your Blogger dashboard.

- Scroll to Crawlers and Indexing.

- Toggle on Enable custom robots.txt.

- Click Custom robots.txt and paste your rules.

This feature is ideal for users who want direct control without external tools.

Sample Custom Robots.txt Template for Blogger

User-agent: *

Disallow: /search

Allow: /

Sitemap: https://yourblog.blogspot.com/sitemap.xml

This example blocks Blogger’s search results and label pages (both live under /search and are usually thin on content) while leaving the rest of your blog open to crawlers.
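If you have Python 3 handy, you can sanity-check a template like this one before pasting it into Blogger. The sketch below uses the standard library’s urllib.robotparser, which is not Google’s parser: it applies the first matching rule rather than the most specific one and doesn’t handle wildcards the way Google does, so treat its verdicts as a rough check. The blog address and sample paths are placeholders.

```python
from urllib.robotparser import RobotFileParser

# The Step 1 template; yourblog.blogspot.com is a placeholder address.
TEMPLATE = """\
User-agent: *
Disallow: /search
Allow: /
Sitemap: https://yourblog.blogspot.com/sitemap.xml
"""

BASE = "https://yourblog.blogspot.com"

parser = RobotFileParser()
parser.parse(TEMPLATE.splitlines())  # parse rules from text, no download

# Hypothetical sample paths: a post, a static page, a search and a label page.
for path in ("/2024/05/my-first-post.html", "/p/about.html",
             "/search?q=seo", "/search/label/SEO"):
    verdict = "allowed" if parser.can_fetch("Googlebot", BASE + path) else "blocked"
    print(f"{path}: {verdict}")
```

The post and static page should come back as allowed, while the search and label URLs are blocked.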

Step 2: Generate Robots.txt Using Online Tools

Top Free Robots.txt Generators for Blogger

If you prefer ready-made solutions, online tools can help. One reliable option is the Free Robots.txt Generator for Blogger. This tool lets you create SEO-friendly rules in just a few clicks, which is handy if you’re not confident writing the rules yourself.

How to Customize Disallow and Allow Rules for Blogger

When using an online generator, you can specify:

- User-agent (e.g., Googlebot, Bingbot).

- Disallow paths for low-value or irrelevant pages.

- Allow rules for important URLs.

- Your sitemap URL, to help search engines discover your content.
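Under the hood, a generator like this mostly assembles text from the options you pick. Here’s a minimal Python sketch of that idea; build_robots_txt is a hypothetical helper, not the code any particular tool uses, and it writes a single User-agent group followed by an optional Sitemap line.

```python
def build_robots_txt(user_agent="*", disallow=(), allow=(), sitemap=None):
    """Assemble a single-group robots.txt (hypothetical helper for illustration)."""
    lines = [f"User-agent: {user_agent}"]
    lines += [f"Disallow: {path}" for path in disallow]
    lines += [f"Allow: {path}" for path in allow]
    if sitemap:
        # Sitemap applies to the whole file, so keep it outside the group.
        lines += ["", f"Sitemap: {sitemap}"]
    return "\n".join(lines) + "\n"


print(build_robots_txt(
    disallow=["/search"],
    allow=["/"],
    sitemap="https://yourblog.blogspot.com/sitemap.xml",
))
```

With these options it prints the same rules as the example output in the next section, with a blank line before the Sitemap line.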

Example Output for a Blogger SEO-Friendly Robots.txt

User-agent: *

Disallow: /search

Allow: /

Sitemap: https://yourblog.blogspot.com/sitemap.xml

This tells search engines to avoid search result pages and focus on your posts and static pages.

Step 3: Write Robots.txt Rules Manually (For Advanced Users)

Syntax Basics: User-agent, Disallow, Allow

Here’s a quick breakdown:

- User-agent: specifies which bots the rule applies to.

- Disallow: blocks bots from crawling specific pages.

- Allow: permits bots to crawl certain pages, even within disallowed paths.
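To see how an Allow rule can reopen part of a disallowed path, here’s a small Python sketch using the standard library’s urllib.robotparser. It keeps Blogger’s label pages (/search/label/...) crawlable while still blocking other search URLs; the label rule is an illustrative assumption, not a recommendation. One caveat: Google picks the most specific (longest) matching rule regardless of order, but Python’s parser uses the first rule that matches, so the Allow line has to come first for the two to agree.

```python
from urllib.robotparser import RobotFileParser

BASE = "https://yourblog.blogspot.com"  # placeholder blog address

ALLOW_FIRST = """\
User-agent: *
Allow: /search/label/
Disallow: /search
"""

DISALLOW_FIRST = """\
User-agent: *
Disallow: /search
Allow: /search/label/
"""


def label_page_allowed(rules):
    """Return whether Python's parser lets Googlebot fetch a label page."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.can_fetch("Googlebot", BASE + "/search/label/SEO")


print("Allow listed first:", label_page_allowed(ALLOW_FIRST))
print("Disallow listed first:", label_page_allowed(DISALLOW_FIRST))
```

With the Allow line first, the label page is allowed. With it second, Python’s first-match logic blocks it, even though Google’s longest-match rule would allow it in both cases.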

Crafting Robots.txt for Optimal Crawling

When you write your robots.txt by hand, you can fine-tune which paths to block and which to allow, based on your SEO strategy. Just don’t treat it as a security measure: the file is public, and it only asks well-behaved bots to stay away.

Sample Manual Robots.txt Code for Blogger Users

User-agent: Googlebot

Disallow: /search

User-agent: *

Disallow:

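Sitemap: https://yourblog.blogspot.com/sitemap.xml

This keeps Googlebot out of search result pages while leaving all your valuable content open to it. The empty Disallow line under User-agent: * lets every other crawler access everything, including /search.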
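Because this file has separate groups, different bots get different answers. A quick Python check with the standard library’s urllib.robotparser makes that visible; Bingbot stands in for any crawler other than Google, and the label-page URL is a placeholder. Python’s parser matches user-agent names loosely, so treat it as an approximation of how each search engine reads the file.

```python
from urllib.robotparser import RobotFileParser

MANUAL = """\
User-agent: Googlebot
Disallow: /search

User-agent: *
Disallow:

Sitemap: https://yourblog.blogspot.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(MANUAL.splitlines())

url = "https://yourblog.blogspot.com/search/label/SEO"  # placeholder label page
for agent in ("Googlebot", "Bingbot"):
    # Googlebot matches its own group; Bingbot falls back to the * group.
    print(agent, "allowed" if parser.can_fetch(agent, url) else "blocked")
```

Googlebot is blocked from the label page, while Bingbot is allowed because the empty Disallow line permits everything.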

Step 4: Generate Custom Robots.txt Using WordPress or Other CMS and Adapt It

Why You Can Borrow Robots.txt Structures from WordPress

WordPress sites usually come with properly structured robots.txt examples designed for SEO. While Blogger is different, you can adapt these templates to fit your blog’s URL structure.

Adapting WordPress Robots.txt Samples for Blogger’s Custom Editor

Blogger has no WordPress folders like /wp-admin/, so swap WordPress-specific paths for Blogger’s URL patterns. For example:

For WordPress:

Disallow: /wp-admin/

For Blogger:

Disallow: /search

Step 5: Use AI Tools to Auto-Generate SEO-Friendly Robots.txt

Using ChatGPT, Gemini, or Other AI for Robots.txt Code

AI tools like ChatGPT or Gemini can help you write clear and effective robots.txt rules. Just describe your needs, and the AI can draft a custom robots.txt for you.

For example, you can say:

"Generate custom robots.txt for a Blogger blog that blocks search result pages and includes my sitemap."

Benefits of Using AI to Avoid Common Mistakes

AI can help you spot syntax errors, avoid overblocking, and save time, which is especially useful if you’re not familiar with robots.txt syntax. Still, review and test whatever it generates, because AI tools can make mistakes too.

Step 6: Validate Your Robots.txt Using Google’s Tools

How to Detect and Fix Syntax Errors

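Before publishing, read through your rules for typos and misplaced lines. Google retired its standalone Robots.txt Tester in late 2023; the robots.txt report in Google Search Console now shows the file Google fetched from your blog, along with any errors or warnings, so you can correct problems once the file is live.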
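Because Google’s report only covers the live file, you may want a quick offline check first. The Python sketch below is a hypothetical, deliberately small linter: it flags lines with no colon, field names outside the four Google documents (User-agent, Allow, Disallow, Sitemap), rules that appear before any User-agent line, paths that don’t start with a slash, and relative sitemap URLs. It is not Google’s parser, and other crawlers accept extra fields such as Crawl-delay, so treat its output as hints.

```python
# Fields Google documents for robots.txt; other crawlers may accept more
# (for example Crawl-delay), so "unknown" here just means "check this".
KNOWN_FIELDS = {"user-agent", "allow", "disallow", "sitemap"}


def find_syntax_problems(robots_text):
    """Return (line_number, message) pairs for suspicious robots.txt lines."""
    problems = []
    seen_user_agent = False
    for number, raw in enumerate(robots_text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        if ":" not in line:
            problems.append((number, "missing ':' separator"))
            continue
        field, value = line.split(":", 1)
        field, value = field.strip().lower(), value.strip()
        if field not in KNOWN_FIELDS:
            problems.append((number, f"unknown field '{field}'"))
        elif field == "user-agent":
            seen_user_agent = True
        elif field == "sitemap":
            if not value.startswith(("http://", "https://")):
                problems.append((number, "sitemap should be an absolute URL"))
        elif not seen_user_agent:
            # Only allow/disallow lines reach this branch.
            problems.append((number, f"'{field}' before any User-agent line"))
        elif value and not value.startswith(("/", "*")):
            problems.append((number, "path should start with '/'"))
    return problems


sample = "Disalow: /search\nUser-agent: *\nDisallow search\nAllow: search\n"
for number, message in find_syntax_problems(sample):
    print(f"line {number}: {message}")
```

An empty "Disallow:" is deliberately not flagged, since it is valid and means "allow everything".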

How to Check Crawling Rules for Specific URLs

Once your file passes syntax validation, use the URL Inspection tool in Google Search Console to see whether specific pages are allowed or blocked by your live robots.txt.
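URL Inspection checks one page at a time. If you want to spot-check a batch of URLs against your live file, a Python sketch like this one downloads robots.txt with the standard library’s urllib.robotparser and reports each URL’s verdict. The helper names, blog address, and URLs are placeholders, and Python’s parser only approximates Google’s matching, so confirm anything surprising in Search Console.

```python
from urllib.robotparser import RobotFileParser


def load_live_rules(blog_url):
    """Download and parse the robots.txt currently served by the blog."""
    parser = RobotFileParser(blog_url.rstrip("/") + "/robots.txt")
    parser.read()  # performs an HTTP request
    return parser


def crawl_report(parser, agent, urls):
    """Map each URL to True (crawlable) or False (blocked) for one user agent."""
    return {url: parser.can_fetch(agent, url) for url in urls}


# Example (requires network access; the address is a placeholder):
# rules = load_live_rules("https://yourblog.blogspot.com")
# print(crawl_report(rules, "Googlebot", [
#     "https://yourblog.blogspot.com/p/about.html",
#     "https://yourblog.blogspot.com/search/label/SEO",
# ]))
```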

Step 7: Submit and Monitor Your Robots.txt in Google Search Console

How to Submit After Updating

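Once you’ve updated your custom robots.txt, there’s nothing extra to upload: Google fetches the file automatically from yourblog.blogspot.com/robots.txt and generally caches it for up to 24 hours. Submit your sitemap and check for crawlability issues in Google Search Console. If you need Google to pick up your new rules sooner, the robots.txt report lets you request a recrawl.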
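Before you submit, it’s worth confirming that the sitemap listed in your robots.txt is the one you’re about to submit. Python 3.8+ exposes Sitemap lines through RobotFileParser.site_maps(), so a tiny sketch like this can pull them out of the text you pasted into Blogger. The helper name and address are placeholders.

```python
from urllib.robotparser import RobotFileParser


def sitemaps_in(robots_text):
    """Return the Sitemap URLs declared in a robots.txt string."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser.site_maps() or []  # site_maps() returns None when there are none


print(sitemaps_in("User-agent: *\nDisallow: /search\nAllow: /\n"
                  "Sitemap: https://yourblog.blogspot.com/sitemap.xml\n"))
```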

How to Monitor Crawling Behavior Using Google Search Console

Google Search Console lets you monitor how search bots interact with your site, spot blocked resources, and fix errors before they impact your rankings. (Related: Website Indexability)

Troubleshooting Robots.txt Warnings or Errors

If you notice crawl warnings, review your file in the robots.txt report in Google Search Console. For deeper issues, audit your canonical URLs too (Related: Remove Canonical URL) to avoid conflicting signals.

Bonus: Best Practices for Maintaining a Healthy Robots.txt File

When to Update Your Robots.txt for New Pages

Every time you launch new sections or redesign your blog, revisit your robots.txt to ensure it’s still aligned with your content strategy.

Why You Should Audit Robots.txt After Major Site Changes

A site redesign or URL structure update can accidentally block important content. Routine audits help you avoid unexpected drops in your search rankings.
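One way to make those audits routine is to compare the old and new rules against a list of URLs you care about. The Python sketch below (newly_blocked is a hypothetical helper built on the standard library’s urllib.robotparser) returns the URLs the new file would block that the old one allowed. The URL list is yours to supply, for example from your sitemap, and Python’s parser only approximates Google’s matching.

```python
from urllib.robotparser import RobotFileParser


def _parse(robots_text):
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


def newly_blocked(old_text, new_text, urls, agent="Googlebot"):
    """List URLs the new robots.txt blocks that the old one allowed."""
    old, new = _parse(old_text), _parse(new_text)
    return [url for url in urls
            if old.can_fetch(agent, url) and not new.can_fetch(agent, url)]


OLD = "User-agent: *\nDisallow: /search\nAllow: /\n"
NEW = "User-agent: *\nDisallow: /search\nDisallow: /p/\n"  # a risky edit
IMPORTANT = [  # placeholder URLs
    "https://yourblog.blogspot.com/2024/05/my-first-post.html",
    "https://yourblog.blogspot.com/p/about.html",
]
print(newly_blocked(OLD, NEW, IMPORTANT))
```

Here the new rules would quietly block the static About page, which is exactly the kind of regression an audit should catch.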

Common Robots.txt Mistakes and How to Avoid Them

- Adding “Disallow: /” or “Disallow: /*” by mistake (either one blocks everything).

- Forgetting to update your Sitemap URL.

- Disallowing paths that hold your actual content, such as dated post URLs (like /2024/05/post-title.html) or static pages under /p/.
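A quick script can catch some of these mistakes before you paste new rules into Blogger. The Python sketch below is a hypothetical checker, not an official tool: it flags Disallow values of / or /*, Disallow rules aimed at Blogger’s content URLs (static pages under /p/ and dated post paths starting with a four-digit year), and the absence of a Sitemap line for your current blog address, for example after moving to a custom domain. It ignores which User-agent group a rule belongs to, so review each warning yourself.

```python
import re

# Blogger serves static pages under /p/ and posts under /YYYY/MM/.
CONTENT_PATH = re.compile(r"^/(p/|\d{4}/)")


def common_mistakes(robots_text, blog_url):
    """Return human-readable warnings for a few common robots.txt mistakes."""
    warnings, sitemaps = [], []
    for raw in robots_text.splitlines():
        line = raw.split("#", 1)[0]  # drop comments
        field, _, value = line.partition(":")
        field, value = field.strip().lower(), value.strip()
        if field == "disallow" and value in ("/", "/*"):
            warnings.append(f"'Disallow: {value}' blocks the whole blog")
        elif field == "disallow" and CONTENT_PATH.match(value):
            warnings.append(f"'Disallow: {value}' may hide posts or pages")
        elif field == "sitemap":
            sitemaps.append(value)
    prefix = blog_url.rstrip("/") + "/"
    if not any(url.startswith(prefix) for url in sitemaps):
        warnings.append(f"no Sitemap line points at {blog_url}")
    return warnings


print(common_mistakes(
    "User-agent: *\nDisallow: /\nSitemap: https://yourblog.blogspot.com/sitemap.xml\n",
    "https://www.example.com",  # hypothetical custom domain
))
```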

Final Thoughts

There’s no one-size-fits-all formula for robots.txt, but now you’ve got seven practical ways to generate custom robots.txt for Blogger — from manual writing to online generators and AI-powered solutions. Whether you choose the built-in editor, free tools like TechyLeaf’s robots.txt generator, or validate your file with Google’s official guide, the right setup can help search engines crawl your blog the smart way.

A well-optimized robots.txt is never a “set it and forget it” deal. As your blog grows and your content strategy evolves, make sure you revisit your file regularly to avoid blocking valuable pages or wasting crawl budget. Smart SEO is about staying proactive — and your custom robots.txt is one part of that puzzle.